It's fun when you find something new, and in R that can happen a LOT. MS SQL was frustrating in that, back in the day, you would wait years for a single feature to come out and then another decade for MS to get their shit together and finish it. Columnstore was a great example: it took years and several versions to get the feature released incrementally. Keep in mind CCI (columnstore) is my absolute favorite thing ever done in SQL, and the same idea happens to be in Hadoop as ORC and vectorization; the same guy PM'd and wrote both, in case you are interested. Open source does not suffer from this, so you frequently get new features or entirely new packages that solve problems and let you explore data and models in ways you never considered. I stumbled upon one such package yesterday.

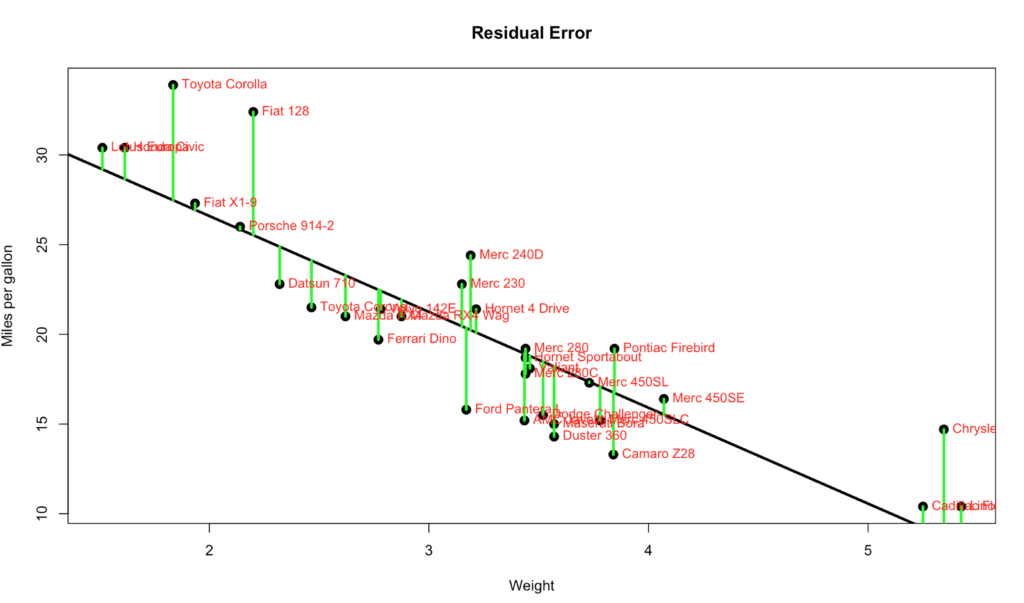

olsrr appears to have been released late last year, so I am pleased it did not live out in the wild for too long before I found it. With the last post having been on forward and backward stepwise regression, olsrr is the perfect continuation of that post. Not surprisingly, the author used the exact same data set I did for his samples, mtcars. With that, there is nothing I can really add. Here is the link to the Variable Selection Methods.

I like the detail that the package goes into when explaining stepwise. Stepwise is awesome, but one thing I skipped over, and that is covered at the very top of that page, is the dimensionality problem that can occur when you try every combination of variables. I used a total of 10 variables in the trained model, which equates to 2^10 (1,024) possible models. That is a lot, and it's super slow to calculate that many models. What if your model had 100 variables and 100,000 rows in the training set? You are not going to do that on your laptop, but this will happen and you will solve it; scale-out is your friend in this case.
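To make that arithmetic concrete, here is a minimal sketch in base R (no olsrr needed). With 10 candidate predictors, each one is either in or out of a model, so trying every subset means 2^10 = 1,024 fits. The variable names below (`predictors`, `pairs`, `best_pair` and so on) are my own, and ranking by AIC is just one reasonable choice for illustration, not necessarily what olsrr does internally.

```r
# Predictors: every mtcars column except the response, mpg
predictors <- setdiff(names(mtcars), "mpg")
k <- length(predictors)

# Each predictor is either in or out of a model, so there are 2^k subsets
# (this count includes the intercept-only model)
n_models <- 2^k

# Number of models for each subset size 0..k; these counts sum to 2^k
models_by_size <- choose(k, 0:k)

# Brute force on a small slice: fit every 2-predictor model and rank by AIC
pairs <- combn(predictors, 2, simplify = FALSE)
aic <- vapply(pairs, function(vars) {
  AIC(lm(reformulate(vars, response = "mpg"), data = mtcars))
}, numeric(1))
best_pair <- pairs[[which.min(aic)]]

# The count doubles with every added variable, so 100 variables is hopeless
n_models_100 <- 2^100

print(n_models)
print(best_pair)
```

Only the 45 two-variable models are actually fit here. The full 1,024 is still doable on a laptop, but since every extra variable doubles the count, 100 variables puts you far past anything brute force can handle, which is where stepwise shortcuts and scale-out come in.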

The more you read, the more you experiment and follow along, and the more data you try these features out on, the better you will get and the better you will understand.